HTTrack can also update existing mirrored sites and resume interrupted downloads. The program is fully configurable and includes an integrated help system. It crawls M3U and AAM files.

httrack: offline browser : copy websites to a local directory


Command to display httrack manual in Linux: $ man 1 httrack

NAME

httrack - offline browser : copy websites to a local directory

SYNOPSIS

httrack [ url ]... [ -filter ]... [ +filter ]... [ -O, --path ] [ -w, --mirror ] [ -W, --mirror-wizard ] [ -g, --get-files ] [ -i, --continue ] [ -Y, --mirrorlinks ] [ -P, --proxy ] [ -%f, --httpproxy-ftp[=N] ] [ -%b, --bind ] [ -rN, --depth[=N] ] [ -%eN, --ext-depth[=N] ] [ -mN, --max-files[=N] ] [ -MN, --max-size[=N] ] [ -EN, --max-time[=N] ] [ -AN, --max-rate[=N] ] [ -%cN, --connection-per-second[=N] ] [ -GN, --max-pause[=N] ] [ -cN, --sockets[=N] ] [ -TN, --timeout[=N] ] [ -RN, --retries[=N] ] [ -JN, --min-rate[=N] ] [ -HN, --host-control[=N] ] [ -%P, --extended-parsing[=N] ] [ -n, --near ] [ -t, --test ] [ -%L, --list ] [ -%S, --urllist ] [ -NN, --structure[=N] ] [ -%D, --cached-delayed-type-check ] [ -%M, --mime-html ] [ -LN, --long-names[=N] ] [ -KN, --keep-links[=N] ] [ -x, --replace-external ] [ -%x, --disable-passwords ] [ -%q, --include-query-string ] [ -o, --generate-errors ] [ -X, --purge-old[=N] ] [ -%p, --preserve ] [ -%T, --utf8-conversion ] [ -bN, --cookies[=N] ] [ -u, --check-type[=N] ] [ -j, --parse-java[=N] ] [ -sN, --robots[=N] ] [ -%h, --http-10 ] [ -%k, --keep-alive ] [ -%B, --tolerant ] [ -%s, --updatehack ] [ -%u, --urlhack ] [ -%A, --assume ] [ -@iN, --protocol[=N] ] [ -%w, --disable-module ] [ -F, --user-agent ] [ -%R, --referer ] [ -%E, --from ] [ -%F, --footer ] [ -%l, --language ] [ -%a, --accept ] [ -%X, --headers ] [ -C, --cache[=N] ] [ -k, --store-all-in-cache ] [ -%n, --do-not-recatch ] [ -%v, --display ] [ -Q, --do-not-log ] [ -q, --quiet ] [ -z, --extra-log ] [ -Z, --debug-log ] [ -v, --verbose ] [ -f, --file-log ] [ -f2, --single-log ] [ -I, --index ] [ -%i, --build-top-index ] [ -%I, --search-index ] [ -pN, --priority[=N] ] [ -S, --stay-on-same-dir ] [ -D, --can-go-down ] [ -U, --can-go-up ] [ -B, --can-go-up-and-down ] [ -a, --stay-on-same-address ] [ -d, --stay-on-same-domain ] [ -l, --stay-on-same-tld ] [ -e, --go-everywhere ] [ -%H, --debug-headers ] [ -%!, --disable-security-limits ] [ -V, --userdef-cmd ] [ -%W, --callback ] [ -K, --keep-links[=N] ]

DESCRIPTION

httrack allows you to download a World Wide Web site from the Internet to a local directory, recursively building all directories and getting HTML, images, and other files from the server to your computer. HTTrack reproduces the original site's relative link structure. Simply open a page of the mirrored website in your browser, and you can browse the site from link to link as if you were viewing it online. HTTrack can also update an existing mirrored site and resume interrupted downloads.

EXAMPLES
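The following is a minimal sketch of basic usage, written for this page rather than taken from the original man page examples. It assumes a POSIX shell; the function name `mirror_site` and the example URL and directory are hypothetical, while `-O`, the `+` scan rule, and `-v` are httrack options listed on this page.

```shell
# mirror_site URL OUTDIR
# Hypothetical helper (not part of httrack): mirror the site at URL into
# OUTDIR, adding a scan rule that allows URLs on the starting host.
mirror_site() {
    url=$1
    outdir=$2
    rest=${url#*://}   # drop an optional "scheme://" prefix
    host=${rest%%/*}   # keep everything before the first "/"
    # -O        : directory for the mirror (and, by default, cache and logs)
    # "+host/*" : scan rule (filter) allowing every URL on that host
    # -v        : print the log on screen
    httrack "$url" -O "$outdir" "+${host}/*" -v
}
```

For example, `mirror_site 'https://www.example.com/docs/' "$HOME/mirrors/example"` would start a mirror of that address under `$HOME/mirrors/example`. Wrapping the call in a function simply keeps the chosen option set in one place.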

OPTIONS

General options:

- -O

- path for mirror/logfiles+cache (-O pathmirror[,pathcacheandlogfiles]) (--path <param>)
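The optional second path in `-O` lets the browsable copy and httrack's cache and log files live in different directories. A minimal sketch, assuming that separation is what you want; the helper name `mirror_with_separate_cache` and the paths used below are hypothetical.

```shell
# mirror_with_separate_cache URL MIRROR_DIR CACHE_DIR
# Hypothetical helper: keep the mirror in MIRROR_DIR and the cache and
# log files in CACHE_DIR, using the "-O pathmirror[,pathcacheandlogfiles]" form.
mirror_with_separate_cache() {
    url=$1
    mirror_dir=$2
    cache_dir=$3
    # Both paths are joined with a comma into one -O argument; this sketch
    # rejects paths that already contain a comma to keep it unambiguous.
    case "$mirror_dir$cache_dir" in
        *,*) echo "paths must not contain commas" >&2; return 1 ;;
    esac
    httrack "$url" -O "${mirror_dir},${cache_dir}"
}
```

For example, `mirror_with_separate_cache 'http://www.example.com/' /srv/mirror /var/cache/httrack` passes `-O /srv/mirror,/var/cache/httrack`.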

Proxy options:

- -P

- proxy use (-P proxy:port or -P user:pass@proxy:port) (--proxy <param>)

- -%f

- *use proxy for ftp (f0 don't use) (--httpproxy-ftp[=N])

- -%b

- use this local hostname to make/send requests (-%b hostname) (--bind <param>)
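A minimal sketch of the proxy options in use, assuming a POSIX shell. The helper name `mirror_via_proxy` and the proxy and host names in the example are hypothetical; the `-P` and `-%b` forms follow the descriptions above.

```shell
# mirror_via_proxy URL OUTDIR PROXY [BIND_HOST]
# Hypothetical helper: send all requests through PROXY ("proxy:port" or
# "user:pass@proxy:port", as accepted by -P) and, optionally, make them
# from the local host name BIND_HOST (-%b).
mirror_via_proxy() {
    url=$1
    outdir=$2
    proxy=$3
    bind_host=${4:-}
    if [ -n "$bind_host" ]; then
        httrack "$url" -O "$outdir" -P "$proxy" -%b "$bind_host"
    else
        httrack "$url" -O "$outdir" -P "$proxy"
    fi
}
```

For example, `mirror_via_proxy 'http://www.example.com/' /tmp/m proxy.example.net:8080`. Note that credentials given as `user:pass@proxy:port` appear on the command line and can therefore be visible to other local users in the process list.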

Limits options:

- -cN

- number of multiple connections (*c8) (--sockets[=N])

- -TN

- timeout: number of seconds after which a non-responding link is shut down (--timeout[=N])

- -RN

- number of retries, in case of timeout or non-fatal errors (*R1) (--retries[=N])

- -JN

- traffic jam control: minimum transfer rate (bytes/second) tolerated for a link (--min-rate[=N])

- -HN

- host is abandoned if: 0=never, 1=timeout, 2=slow, 3=timeout or slow (--host-control[=N])
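The limit options combine on a single command line. The sketch below is a hypothetical helper for a slow or shared server; the specific numbers are illustrative assumptions, not values recommended by the httrack documentation.

```shell
# polite_mirror URL OUTDIR
# Hypothetical helper: conservative limits for a slow or shared server.
polite_mirror() {
    url=$1
    outdir=$2
    # -c2    : at most 2 simultaneous connections (the default is 8)
    # -T30   : shut down a link that has not responded for 30 seconds
    # -R3    : retry up to 3 times on timeouts or non-fatal errors
    # -J1024 : minimum tolerated transfer rate of 1024 bytes/second per link
    # -H3    : abandon the host on a timeout or if it is too slow
    httrack "$url" -O "$outdir" -c2 -T30 -R3 -J1024 -H3
}
```

Lowering `-c` reduces load on the remote server at the cost of a slower mirror; the other values only decide when httrack gives up on a link or host.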

Build options:

- -NN

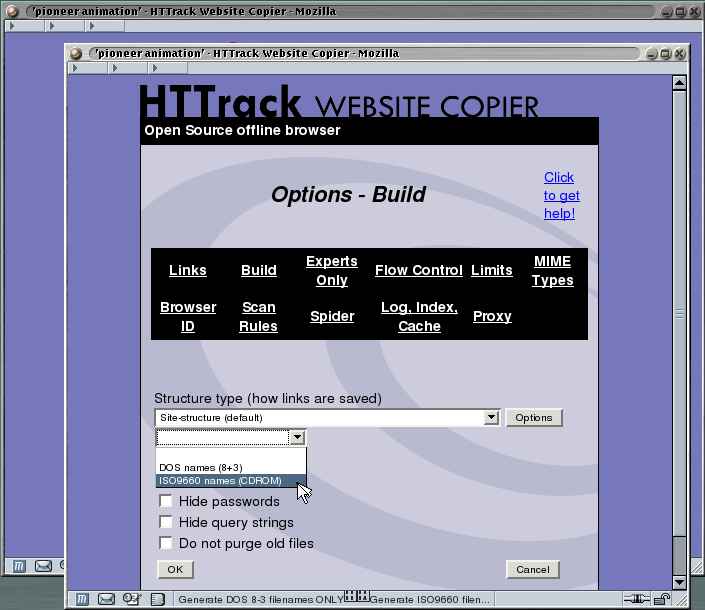

- structure type (0 *original structure, 1+: see below) (--structure[=N])

- -or

- user defined structure (-N '%h%p/%n%q.%t')

- -%N

- delayed type check: don't make any link test, but wait for the file download to start instead (experimental) (%N0 don't use, %N1 use for unknown extensions, * %N2 always use)

- -%D

- cached delayed type check: don't wait for the remote type during updates, to speed them up (%D0 wait, * %D1 don't wait) (--cached-delayed-type-check)

- -%M

- generate an RFC MIME-encapsulated full archive (.mht) (--mime-html)

- -LN

- long names (L1 *long names / L0 8-3 conversion / L2 ISO9660 compatible) (--long-names[=N])

- -KN

- keep original links (e.g. http://www.adr/link) (K0 *relative link, K absolute links, K4 original links, K3 absolute URI links, K5 transparent proxy link) (--keep-links[=N])

- -x

- replace external HTML links with error pages (--replace-external)

- -%x

- do not include any password for external password-protected websites (%x0 include) (--disable-passwords)

- -%q

- *include query string for local files (useless, for information purposes only) (%q0 don't include) (--include-query-string)

- -o

- *generate an output HTML file in case of error (404, etc.) (o0 don't generate) (--generate-errors)

- -X

- *purge old files after update (X0: keep, don't delete) (--purge-old[=N])

- -%p

- preserve HTML files as is (identical to -K4 -%F '') (--preserve)

- -%T

- links conversion to UTF-8 (--utf8-conversion)
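A minimal sketch of the build options in use, assuming a POSIX shell; the helper name `mirror_by_host` is hypothetical. It passes the user-defined structure quoted above ('%h%p/%n%q.%t'), so files are grouped by host and path, and %q (a short MD5 of the query string) keeps pages that differ only in their query string apart; `-x` additionally replaces links that leave the mirror with error pages.

```shell
# mirror_by_host URL OUTDIR
# Hypothetical helper: store files as <host><path>/<name><query-hash>.<type>
# and replace external HTML links with error pages.
mirror_by_host() {
    url=$1
    outdir=$2
    # Quote the template so it reaches httrack as one unchanged argument;
    # httrack itself expands the % placeholders.
    httrack "$url" -O "$outdir" -N '%h%p/%n%q.%t' -x
}
```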

Browser ID:

- -F

- user-agent field sent in HTTP headers (-F 'user-agent name') (--user-agent <param>)

- -%R

- default referer field sent in HTTP headers (--referer <param>)

- -%E

- from email address sent in HTTP headers (--from <param>)

- -%F

- footer string in HTML code (-%F 'Mirrored [from host %s [file %s [at %s]]]') (--footer <param>)

- -%l

- preferred language (-%l 'fr, en, jp, *') (--language <param>)

- -%a

- accepted formats (-%a 'text/html,image/png;q=0.9,*/*;q=0.1') (--accept <param>)

- -%X

- additional HTTP header line (-%X 'X-Magic: 42') (--headers <param>)
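The browser ID options control the HTTP headers httrack sends. A minimal sketch, assuming a POSIX shell; the helper name `mirror_with_identity`, the user-agent string, and the extra header are hypothetical example values.

```shell
# mirror_with_identity URL OUTDIR CONTACT_EMAIL
# Hypothetical helper: identify the crawler to the remote server.
mirror_with_identity() {
    url=$1
    outdir=$2
    email=$3
    # -F  : user-agent field
    # -%E : "from" e-mail address (a contact for the site operator)
    # -%l : preferred languages, most preferred first
    # -%X : one additional raw HTTP header line
    httrack "$url" -O "$outdir" \
        -F 'ExampleArchiver/1.0 (offline copy)' \
        -%E "$email" \
        -%l 'en, *' \
        -%X 'X-Purpose: archiving'
}
```

A descriptive user-agent and a contact address give site operators a way to reach you if the mirror causes problems.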

Expert options:

- -pN

- priority mode: (* p3) (--priority[=N])

- -p0

- just scan, don't save anything (for checking links)

- -p1

- save only html files

- -p2

- save only non html files

- -p3

- *save all files

- -p7

- get html files before, then treat other files

- -S

- stay on the same directory (--stay-on-same-dir)

- -D

- *can only go down into subdirs (--can-go-down)

- -U

- can only go to upper directories (--can-go-up)

- -B

- can go both up and down in the directory structure (--can-go-up-and-down)

- -a

- *stay on the same address (--stay-on-same-address)

- -d

- stay on the same principal domain (--stay-on-same-domain)

- -l

- stay on the same TLD (eg: .com) (--stay-on-same-tld)

- -e

- go everywhere on the web (--go-everywhere)

- -%H

- debug HTTP headers in logfile (--debug-headers)
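Priority mode p0 together with the travel options gives a link check that saves nothing. A minimal sketch, assuming a POSIX shell; the helper name `check_links` is hypothetical, and the results would have to be read from the log files httrack writes under the working directory.

```shell
# check_links URL WORKDIR
# Hypothetical helper: crawl the principal domain of URL without saving
# any page, so only the logs in WORKDIR remain.
check_links() {
    url=$1
    workdir=$2
    # -p0 : just scan, don't save anything (for checking links)
    # -d  : stay on the same principal domain
    # -%H : debug HTTP headers in the log file
    httrack "$url" -O "$workdir" -p0 -d -%H
}
```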

Guru options: (do NOT use if possible)

- -%!

- bypass built-in security limits aimed at avoiding bandwidth abuse (bandwidth, simultaneous connections) (--disable-security-limits). IMPORTANT NOTE: DANGEROUS OPTION, ONLY SUITABLE FOR EXPERTS. USE IT WITH EXTREME CARE.

Command-line specific options:

- -%W

- use an external library function as a wrapper (-%W myfoo.so[,myparameters]) (--callback <param>)

Details: Option N

- -N102

- Identical to N2 except that 'web' is replaced by the site's name

- -N103

- Identical to N3 except that 'web' is replaced by the site's name

- -N104

- Identical to N4 except that 'web' is replaced by the site's name

- -N105

- Identical to N5 except that 'web' is replaced by the site's name

- -N199

- Identical to N99 except that 'web' is replaced by the site's name

- -N1001

- Identical to N1 except that there is no 'web' directory

- -N1002

- Identical to N2 except that there is no 'web' directory

- -N1003

- Identical to N3 except that there is no 'web' directory (option set for the -g option)

- -N1004

- Identical to N4 except that there is no 'web' directory

- -N1005

- Identical to N5 except that there is no 'web' directory

- -N1099

- Identical to N99 except that there is no 'web' directory

Details: User-defined option N

%n Name of file without file type (ex: image)

%N Name of file, including file type (ex: image.gif)

%t File type (ex: gif)

%p Path [without ending /] (ex: /someimages)

%h Host name (ex: www.someweb.com)

%M URL MD5 (128 bits, 32 ascii bytes)

%Q query string MD5 (128 bits, 32 ascii bytes)

%k full query string

%r protocol name (ex: http)

%q small query string MD5 (16 bits, 4 ascii bytes)

%s? Short name version (ex: %sN)

%[param] param variable in query string

%[param:before:after:empty:notfound] advanced variable extraction
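To make the placeholder list concrete, here is a rough, hypothetical preview of how %r, %h, %p, %n, %N and %t map onto a URL, using the examples given above (www.someweb.com, /someimages, image.gif). It is not httrack's implementation: the function `expand_structure` is invented for illustration, assumes a URL of the form scheme://host/dir/.../name.ext with no query string, and copies every other character (including placeholders it does not know, such as %q) through unchanged.

```shell
# expand_structure URL TEMPLATE
# Hypothetical preview of a few user-defined -N placeholders.
expand_structure() {
    url=$1
    tpl=$2
    proto=${url%%://*}       # %r  protocol name, e.g. http
    rest=${url#*://}         # host/dir/.../name.ext
    host=${rest%%/*}         # %h  host name, e.g. www.someweb.com
    file_path=/${rest#*/}    # /dir/.../name.ext
    file=${file_path##*/}    # %N  name with file type, e.g. image.gif
    dir=${file_path%/*}      # %p  path without ending /, e.g. /someimages
    name=${file%.*}          # %n  name without file type, e.g. image
    type=${file##*.}         # %t  file type, e.g. gif
    out=
    while [ -n "$tpl" ]; do
        case $tpl in
            %r*) out=$out$proto; tpl=${tpl#%r} ;;
            %h*) out=$out$host;  tpl=${tpl#%h} ;;
            %p*) out=$out$dir;   tpl=${tpl#%p} ;;
            %n*) out=$out$name;  tpl=${tpl#%n} ;;
            %N*) out=$out$file;  tpl=${tpl#%N} ;;
            %t*) out=$out$type;  tpl=${tpl#%t} ;;
            *)   rest_tpl=${tpl#?}
                 out=$out${tpl%"$rest_tpl"}   # copy one literal character
                 tpl=$rest_tpl ;;
        esac
    done
    printf '%s\n' "$out"
}
```

With this sketch, `expand_structure 'http://www.someweb.com/someimages/image.gif' '%h%p/%n.%t'` prints `www.someweb.com/someimages/image.gif`, and the template `'%r/%h/%N'` gives `http/www.someweb.com/image.gif`.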

Details: User-defined option N and advanced variable extraction

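%[param:before:after:empty:notfound]

- param: parameter name

- before: string to prepend if the parameter was found

- after: string to append if the parameter was found

- empty: string replacement if the parameter was empty

- notfound: string replacement if the parameter could not be found

- All fields except the first one (the parameter name) can be empty.

Details: Option K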

- -K0

- foo.cgi?q=45 -> foo4B54.html?q=45 (relative URI, default)

- -K

- -> http://www.foobar.com/folder/foo.cgi?q=45 (absolute URL) (--keep-links[=N])

- -K3

- -> /folder/foo.cgi?q=45 (absolute URI)

- -K4

- -> foo.cgi?q=45 (original URL)

- -K5

- -> http://www.foobar.com/folder/foo4B54.html?q=45 (transparent proxy URL)

Shortcuts:

FILES

- /etc/httrack.conf

- The system-wide configuration file.

ENVIRONMENT

LIMITS

- Files whose names are generated by scripts may not be found (ex: img.src='image'+a+Mobj.dst+'.gif')


- Some Java classes may not find some of the files they use (classes included)

- CGI (cgi-bin) links may not work properly in some cases (parameters needed). To avoid them, use filters such as -*cgi-bin*
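The cgi-bin workaround above can be applied directly as a scan rule. A minimal sketch, assuming a POSIX shell; the helper name `mirror_without_cgi` is hypothetical, and the filter is the -*cgi-bin* rule suggested in the text.

```shell
# mirror_without_cgi URL OUTDIR
# Hypothetical helper: exclude every URL containing "cgi-bin" with a "-" rule.
mirror_without_cgi() {
    url=$1
    outdir=$2
    # Quote the filter so the shell does not try to expand "*" itself.
    httrack "$url" -O "$outdir" '-*cgi-bin*'
}
```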

BUGS

Please report bugs to <bugs@httrack.com>. Include a complete, self-contained example that will allow the bug to be reproduced, and say which version of httrack you are using. Do not forget to detail the options used, the OS version, and any other information you deem necessary.

COPYRIGHT

Copyright (C) 1998-2016 Xavier Roche and other contributors

This program is free software: you can redistribute it and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation, either version 3 of the License, or (at your option) any later version.


This program is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License for more details.

You should have received a copy of the GNU General Public License along with this program. If not, see <http://www.gnu.org/licenses/>.

AVAILABILITY

The most recent released version of httrack can be found at: http://www.httrack.com

AUTHOR

Xavier Roche <roche@httrack.com>

SEE ALSO

The HTML documentation (available online at http://www.httrack.com/html/) contains more detailed information. Please also refer to the httrack FAQ (available online at http://www.httrack.com/html/faq.html).
